Emotion Recognition Using A Transformer-Based Model

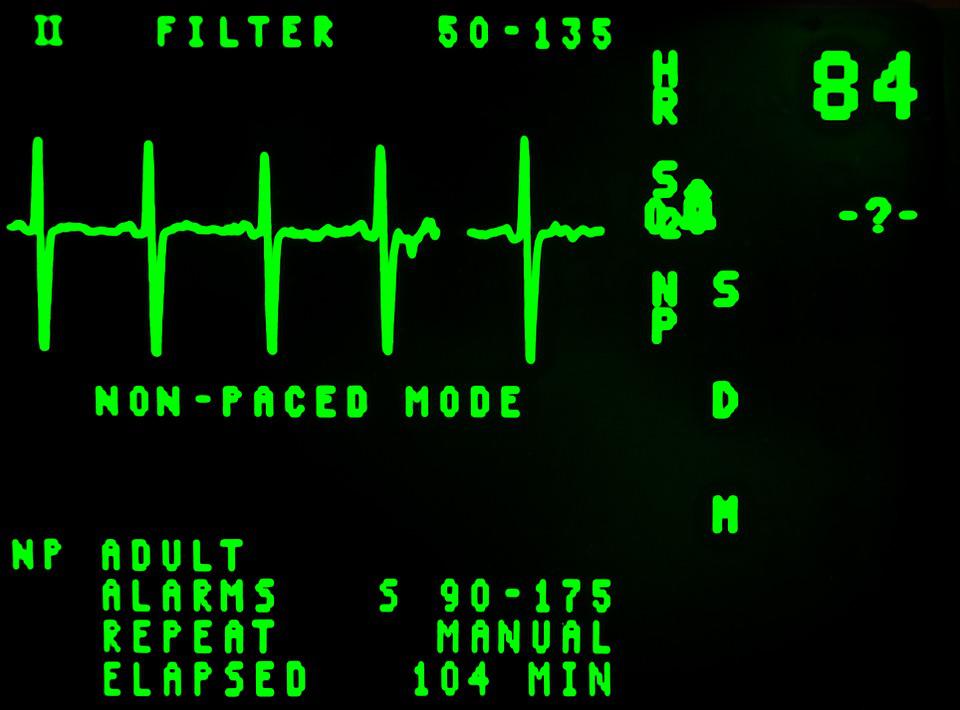

A Transformer-based approach for processing electrocardiograms is practical for boosting emotion recognition ability.

Author: Suleman Shah | Reviewer: Han Ju | Dec 21, 2022


The Transformer's attention mechanisms can be used to create contextualized representations of a signal, assigning greater weight to its most relevant sections.

A fully connected network can then use these representations to predict a person's emotional state.
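The weighting idea described above can be illustrated with a minimal sketch of scaled dot-product self-attention. It is only an illustration: real Transformers use learned query, key, and value projections and several attention heads, whereas this sketch assumes identity projections, and the toy feature values are made up.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention with identity projections (assumption).

    Each output vector is a weighted average of all input vectors, where the
    weights reflect how similar each position is to the current one.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        context = [
            sum(w * v[d] for w, v in zip(weights, vectors)) for d in range(dim)
        ]
        outputs.append(context)
        all_weights.append(weights)
    return outputs, all_weights


# Toy "signal" of three 2-dimensional feature vectors (made-up values).
toy_features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
contextual, attention_weights = self_attention(toy_features)
```

In this toy case the first position attends most strongly to itself and to the similar second position, and least to the dissimilar third one, which is the sense in which attention gives more weight to pertinent sections.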

A team of researchers headed by Juan Vazquez-Rodriguez and Grégoire Lefebvre from the University of Grenoble Alpes in France collected numerous ECG datasets with no emotion labels to pre-train a model they subsequently fine-tuned for emotion identification using the AMIGOS dataset.

They used self-supervised learning to compensate for the relatively small number of datasets that carry emotion labels.

Their technique achieved state-of-the-art performance for emotion identification using ECG data on AMIGOS.

The research demonstrated that Transformers and pre-training are promising approaches to recognizing emotions from physiological signals.

Binary Emotion Recognition

The model was tested on a downstream task of binary emotion recognition (high or low levels of arousal and valence) from ECG signals.

The researchers describe the datasets used, the preprocessing applied, and the parameters of the two phases, pre-training and fine-tuning.

In the AMIGOS dataset, 40 participants watched videos specifically selected to elicit emotions.

After each video, they completed a self-assessment of their emotional state.

Subjects rated their degrees of arousal and valence on a scale of 1 to 9.

37 of the 40 participants viewed 20 videos, while the other three watched only 16.

ECG data were collected on both the left and right arms throughout each experiment.

To fine-tune the model, the researchers used only the data from the left arm.

AMIGOS includes a preprocessed version of the data that has been down-sampled to 128 Hz and filtered using a low-pass filter with a cut-off frequency of 60 Hz.

These preprocessed data were used in the study's experiments, including the pre-training phase.

The ECG data utilized for fine-tuning consists of around 65 hours of recordings.

Signal Pre-processing, Pre-training, And Fine-tuning

Signals were filtered using an 8th-order Butterworth band-pass filter with low and high cut-off frequencies of 0.8 Hz and 50 Hz.

Pre-training the model on many datasets should reduce overfitting when fine-tuning one.

Moreover, the model without pre-training tended to overfit quickly, whereas the pre-trained model did not.

These findings showed the value of pretraining Transformers.

The pre-trained model outperforms the model without pre-training in both accuracy and F1 score.
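For readers unfamiliar with the two metrics, here is a minimal sketch of how accuracy and F1 score are computed for a binary task. The labels and predictions are toy values, not results from the study, and the sketch assumes the plain binary F1 of the "high" class; the article does not say which F1 averaging the researchers used.

```python
def accuracy_and_f1(y_true, y_pred):
    """Return (accuracy, F1) for binary labels, treating 1 as the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)

    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return accuracy, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return accuracy, f1


# Toy example: 1 = high arousal, 0 = low arousal (made-up values).
toy_true = [1, 1, 0, 0, 1, 0]
toy_pred = [1, 0, 0, 1, 1, 0]
toy_accuracy, toy_f1 = accuracy_and_f1(toy_true, toy_pred)
```

Accuracy counts all correct predictions, while F1 balances precision and recall, which makes it more informative when the low and high classes are not equally frequent.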

To evaluate the contribution of pre-training, the researchers also fine-tuned the model on AMIGOS with all parameters randomly initialized instead of starting from a pre-trained signal encoder.

The dataset's emotional self-assessments were used as labels.

Since these self-assessments rate arousal and valence from 1 to 9, the average rating serves as the threshold between the low and high classes.
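A minimal sketch of turning 1-to-9 self-assessment ratings into binary labels with a mean threshold follows. The ratings are made up, and two details are assumptions because the article does not specify them: the mean is taken over whatever list of ratings is passed in (it could be per subject or over the whole dataset), and a rating exactly equal to the mean is assigned to the low class.

```python
from statistics import mean


def binarize_ratings(ratings):
    """Label each rating 1 (high) if it exceeds the mean rating, else 0 (low)."""
    threshold = mean(ratings)
    labels = [1 if rating > threshold else 0 for rating in ratings]
    return labels, threshold


# Toy arousal ratings on the 1-to-9 scale (made-up values).
toy_arousal = [2.0, 7.5, 5.0, 8.0, 3.5]
toy_labels, toy_threshold = binarize_ratings(toy_arousal)
```

The same function would be applied separately to arousal and to valence, giving two independent binary classification tasks.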

The model was fine-tuned using the AMIGOS dataset for emotion identification.

The receptive field size was chosen because it is comparable to the typical interval between peaks in an ECG signal, 0.6 to 1 s.

Thus, the receptive field is 113 input values, or about 0.88 s at the 128 Hz sampling rate.
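The arithmetic behind these figures can be checked with a short sketch. It assumes the receptive field comes from a stack of stride-1 convolutional layers in the input encoder; the kernel sizes below are hypothetical, chosen for illustration only because they produce 113 samples, and are not taken from the article.

```python
def receptive_field(kernel_sizes, strides=None):
    """Receptive field (in input samples) of stacked 1D convolutions."""
    if strides is None:
        strides = [1] * len(kernel_sizes)
    field, jump = 1, 1
    for kernel, stride in zip(kernel_sizes, strides):
        field += (kernel - 1) * jump  # each layer widens the field
        jump *= stride                # strides spread later layers further apart
    return field


SAMPLING_RATE_HZ = 128                # AMIGOS preprocessed sampling rate
HYPOTHETICAL_KERNELS = [65, 33, 17]   # illustrative assumption, not from the article

field_samples = receptive_field(HYPOTHETICAL_KERNELS)
field_seconds = field_samples / SAMPLING_RATE_HZ
```

With these values the field is 113 samples, and 113 / 128 is roughly 0.88 s, which falls inside the 0.6 to 1 s range quoted for the interval between ECG peaks.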

Layer normalization is applied to the first layer and to the encoder output.

The input encoder is a 1D-CNN with ReLU activation.

Because AMIGOS was also used for fine-tuning, the same segments could not be used for both pre-training and evaluation.

Two models were therefore pre-trained, one using half of the AMIGOS data and the other using the remaining half.

When testing on a given set of AMIGOS segments, the researchers fine-tuned the model that had been pre-trained on the other half of AMIGOS.

Both models were pre-trained on 83,401 10-second segments.

For pre-training, they used ASCERTAIN, DREAMER, PsPM-FR, PsPM-RRM1-2, PsPM-VIS, and AMIGOS.

Each subject's signal is normalized to have zero mean and unit variance.

Finally, the signals are divided into 10-second segments.
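The normalization and segmentation steps can be sketched as follows. This is an illustration under stated assumptions, not the researchers' code: the windows are assumed to be non-overlapping, any leftover samples at the end are dropped, and the toy signal is a synthetic sine wave rather than real ECG data.

```python
import math
from statistics import fmean, pstdev

SAMPLING_RATE_HZ = 128   # AMIGOS preprocessed sampling rate
SEGMENT_SECONDS = 10     # segment length mentioned in the article


def normalize_subject(signal):
    """Scale one subject's signal to zero mean and unit variance."""
    mu = fmean(signal)
    sigma = pstdev(signal)
    if sigma == 0:
        return [0.0 for _ in signal]
    return [(x - mu) / sigma for x in signal]


def split_into_segments(signal, rate_hz=SAMPLING_RATE_HZ, seconds=SEGMENT_SECONDS):
    """Cut a signal into non-overlapping fixed-length windows (assumption)."""
    length = rate_hz * seconds
    usable = len(signal) - len(signal) % length  # drop the incomplete tail
    return [signal[i:i + length] for i in range(0, usable, length)]


# Synthetic stand-in for one subject's recording (made-up data).
toy_signal = [math.sin(0.1 * n) + 0.5 for n in range(3000)]
normalized = normalize_subject(toy_signal)
segments = split_into_segments(normalized)
```

At 128 Hz each 10-second segment holds 1,280 samples, so the 3,000-sample toy signal yields two full segments.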

Future Of Using Transformers For Emotion Recognition From ECG Signals

This study opens up several directions for future work.

New pre-training tasks may be investigated; alternative approaches, such as contrastive loss or triplet loss, may be better suited to the specific characteristics of ECG signals than masked points prediction, which was employed in this study.
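To make the masked points prediction task concrete, here is a minimal sketch of the data side of such an objective: some points of a segment are hidden, and a model would be trained to reconstruct them. The masking ratio, the replacement value, and the mean squared error loss are all hypothetical choices for illustration; the article does not state the ones used in the study.

```python
import random


def mask_points(segment, mask_ratio=0.15, mask_value=0.0, seed=0):
    """Hide a random subset of points; return the corrupted copy and the indices."""
    rng = random.Random(seed)
    n_masked = max(1, round(len(segment) * mask_ratio))
    masked_idx = sorted(rng.sample(range(len(segment)), n_masked))
    corrupted = list(segment)
    for i in masked_idx:
        corrupted[i] = mask_value
    return corrupted, masked_idx


def masked_mse(prediction, target, masked_idx):
    """Mean squared error computed only at the masked positions."""
    return sum((prediction[i] - target[i]) ** 2 for i in masked_idx) / len(masked_idx)


# Toy segment with non-zero values (made-up data).
toy_segment = [0.1 * n for n in range(1, 21)]
corrupted, masked_idx = mask_points(toy_segment)

# A model that simply echoes the corrupted input is penalized;
# a perfect reconstruction of the original has zero loss.
loss_without_reconstruction = masked_mse(corrupted, toy_segment, masked_idx)
loss_perfect = masked_mse(toy_segment, toy_segment, masked_idx)
```

Because the loss only looks at hidden positions, no emotion labels are needed, which is what allows pre-training on unlabeled ECG recordings.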

Extending this study to additional input modalities (EEG, GSR, and even non-physiological inputs like environmental sensors) and, more generally, to multimodal scenarios might further improve emotion recognition.

Finally, larger studies with more data from different sources will help clarify how well this method works.

Final Words

The researchers looked at using transformers to detect arousal and valence in ECG signals.

This technique employed self-supervised learning for pre-training using unlabeled data, followed by fine-tuning with labeled data.

The experiments showed that the model generates robust features for predicting arousal and valence on the AMIGOS dataset and outperforms current state-of-the-art techniques.

This study showed that self-supervision and attention-based models, such as Transformers, may be effectively employed in emotion recognition research.

