Human Activity Recognition - What Tools Are Used And How They Work

Human activity recognition is crucial in human-to-human contact and interpersonal relationships. It is tough to extract information about a person's identity, personality, and psychological condition.

Author: Suleman Shah | Reviewer: Han Ju | Dec 30, 2022

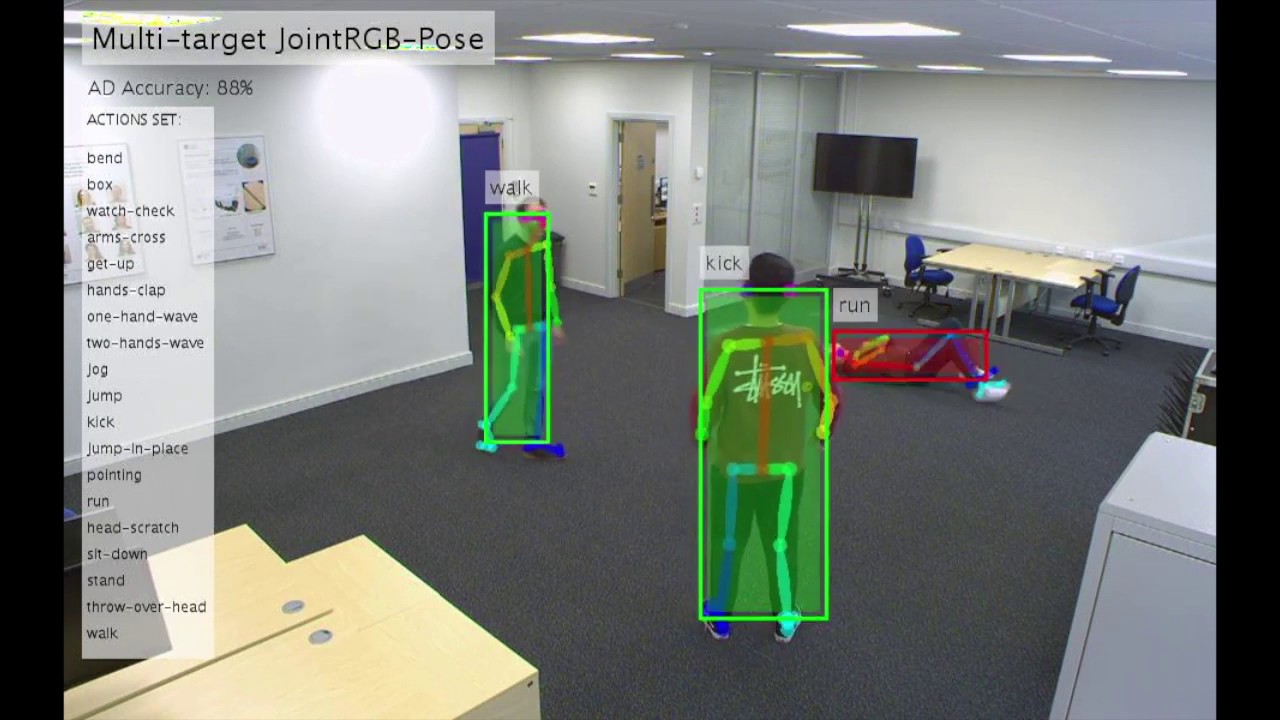

The human capacity to identify another person's activity is one of the central research subjects in computer vision and machine learning. Many applications, including video surveillance systems, human-computer interfaces, and robotics for human behavior characterization, require a multimodal activity recognition system.

What Is Human Activity Recognition?

Human activity recognition is an essential topic that focuses on recognizing a person's movement or action based on sensor data.

Movements are everyday indoor activities such as walking, conversing, standing, and sitting. They might also be more concentrated tasks, such as those conducted in a kitchen or manufacturing floor.

Sensor data, such as video, radar, or other wireless signals, may be captured remotely. Data may also be collected directly from the subject, who carries specialized gear or a smartphone equipped with accelerometers and gyroscopes.

Collecting sensor data for activity detection has traditionally been difficult and costly, necessitating bespoke hardware. Smartphones and other personal tracking devices used for fitness and health monitoring are now inexpensive and widely available. As a result, sensor data from these devices is cheaper to acquire and more common, and activity recognition from such data has become the more widely studied form of the overall problem.

The goal is to forecast activity based on a snapshot of sensor data, often from one or a few sensors. This topic is often phrased as a univariate or multivariate time series classification challenge.

It is a difficult challenge since there are no clear or straightforward methods to match the recorded sensor data to particular human behaviors. Each subject may conduct an activity differently, resulting in variances in the recorded sensor data.
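To make the time series classification framing more concrete, here is a minimal sketch of how a raw sensor stream might be cut into windows and summarized as feature rows for a classifier. The function names, the window size, and the sample trace are illustrative assumptions, not part of any particular dataset or library.

```python
from statistics import mean, pstdev

def sliding_windows(samples, size, step):
    """Split a sequence of samples into fixed-length, possibly overlapping windows."""
    return [samples[i:i + size] for i in range(0, len(samples) - size + 1, step)]

def window_features(window):
    """Describe one window of (x, y, z) samples by the mean and spread of each axis."""
    features = []
    for axis in zip(*window):
        features.extend([mean(axis), pstdev(axis)])
    return features

# Hypothetical accelerometer trace: four still samples, then four shaking samples.
trace = [(0.0, 0.0, 9.8)] * 4 + [(1.0, -1.0, 9.8), (-1.0, 1.0, 9.8)] * 2

windows = sliding_windows(trace, size=4, step=2)        # 50% overlap
feature_rows = [window_features(w) for w in windows]    # one row per window
```

Each row in `feature_rows` could then be passed, together with an activity label, to any standard classifier. Because subjects perform the same activity differently, hand-picked summary statistics like these are only a starting point.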

Human Activity Recognition Process

Most work in human activity recognition assumes a figure-centric scene with an uncluttered background. Complex actions may be broken down into simpler activities that are easier to identify. People perform a movement according to their own habits, which makes determining the underlying activity challenging. Building a real-time visual model for learning and understanding human emotions is also a difficult challenge. Human activity recognition seeks to identify activities in video sequences or still images.

To address these issues, a task with three components is required:

- Background subtraction: The system attempts to separate the parts of the image that are invariant over time (background) from the objects that move or change (foreground).

- Human tracking: The system locates human motion over time.

- Human action and object detection: The system localizes human activity in an image.

Human activities are classified, based on complexity, as gestures, atomic actions, human-to-object or human-to-human interactions, group actions, behaviors, and events.
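As a rough illustration of the background subtraction and localization steps listed above, the sketch below keeps a running-average background model and thresholds the difference against a new frame. The frame contents, `alpha`, and `threshold` are made-up values; production systems typically use more robust statistical background models.

```python
def update_background(background, frame, alpha=0.1):
    """Running average: blend the new frame into the background estimate."""
    return [[(1 - alpha) * b + alpha * f for b, f in zip(b_row, f_row)]
            for b_row, f_row in zip(background, frame)]

def foreground_mask(background, frame, threshold=30.0):
    """Mark pixels that differ from the background by more than the threshold."""
    return [[abs(f - b) > threshold for b, f in zip(b_row, f_row)]
            for b_row, f_row in zip(background, frame)]

def bounding_box(mask):
    """Return (top, left, bottom, right) of the foreground pixels, or None."""
    hits = [(r, c) for r, row in enumerate(mask) for c, on in enumerate(row) if on]
    if not hits:
        return None
    rows = [r for r, _ in hits]
    cols = [c for _, c in hits]
    return min(rows), min(cols), max(rows), max(cols)

# Hypothetical 5x5 grayscale frames: an empty scene, then a bright 2x2 object.
empty = [[10.0] * 5 for _ in range(5)]
with_object = [row[:] for row in empty]
for r in (1, 2):
    for c in (2, 3):
        with_object[r][c] = 200.0

background = empty
for _ in range(3):                       # learn the static scene
    background = update_background(background, empty)

mask = foreground_mask(background, with_object)
box = bounding_box(mask)                 # crude localization of the moving object
```

Repeating the bounding-box step frame by frame gives a very simple form of tracking; the action detection step would then operate on the tracked region.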

Gestures are regarded as rudimentary motions of a person's bodily parts that may correlate to a specific person's activity. Atomic actions are motions of a person that describe a particular movement that may be part of a larger activity. Human-to-object or human-to-human interactions are interactions between two or more people or things. Group actions are activities carried out by a group of individuals. Human behaviors are physical activities related to an individual's emotions, personality, and psychological condition.

Unimodal Methods For Human Activity Recognition

Techniques for identifying human activities from data of a single modality are known as unimodal human activity identification methods. Most current techniques depict human activities as a collection of visual characteristics collected from video sequences or still photos. Multiple classification algorithms are used to detect the underlying activity label. For detecting human activities based on motion characteristics, unimodal techniques are acceptable.

Space-Time Methods

Space-time methods identify activities from space-time features or by trajectory matching. A large family of approaches is based on optical flow, which has proven to be a useful cue. Some methods for real-time action categorization and prediction treat actions as 3D space-time silhouettes of moving people.
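Optical flow can be estimated in several ways. The sketch below uses a single-window Lucas-Kanade least-squares estimate, chosen here only as one well-known example and not as the method of any specific work mentioned above. The synthetic Gaussian blob and its one-pixel shift are assumptions made for the demonstration.

```python
import math

def gaussian_blob(size, cx, cy, sigma=4.0):
    """Synthetic grayscale image containing one smooth blob centered at (cx, cy)."""
    return [[math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))
             for x in range(size)] for y in range(size)]

def lucas_kanade(prev, curr):
    """Estimate one (u, v) displacement for the whole patch by least squares."""
    sxx = sxy = syy = sxt = syt = 0.0
    height, width = len(prev), len(prev[0])
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            ix = (prev[y][x + 1] - prev[y][x - 1]) / 2.0   # horizontal gradient
            iy = (prev[y + 1][x] - prev[y - 1][x]) / 2.0   # vertical gradient
            it = curr[y][x] - prev[y][x]                   # temporal difference
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("patch has too little texture to estimate flow")
    # Solve [[sxx, sxy], [sxy, syy]] @ [u, v] = -[sxt, syt]
    u = (-sxt * syy + syt * sxy) / det
    v = (-syt * sxx + sxt * sxy) / det
    return u, v

prev_frame = gaussian_blob(32, cx=15, cy=16)
curr_frame = gaussian_blob(32, cx=16, cy=16)   # blob moved one pixel to the right
u, v = lucas_kanade(prev_frame, curr_frame)    # u close to 1, v close to 0
```

Flow vectors computed per region and stacked over time are one way to build the space-time features this family of methods relies on.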

Stochastic Methods

Many stochastic approaches, such as hidden Markov models (HMMs) and hidden conditional random fields (HCRFs), have been developed and employed by researchers to infer meaningful findings for human activity recognition. Each action is characterized by a feature vector in the stochastic approach, which incorporates information about location, velocity, and local descriptors.
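A common way to use HMMs for recognition is to keep one model per activity and pick the model that assigns the observed sequence the highest likelihood. The sketch below does this with the forward algorithm. The two activities, the probabilities, and the binary "low motion / high motion" observation symbols are invented for illustration; real systems would quantize or model the feature vectors described above.

```python
def forward_likelihood(obs, start, trans, emit):
    """P(obs | model) for a discrete HMM, computed with the forward algorithm."""
    n = len(start)
    alpha = [start[s] * emit[s][obs[0]] for s in range(n)]
    for o in obs[1:]:
        alpha = [sum(alpha[p] * trans[p][s] for p in range(n)) * emit[s][o]
                 for s in range(n)]
    return sum(alpha)

# Hypothetical two-state models. Observation 0 = low motion, 1 = high motion.
MODELS = {
    "walking": dict(start=[0.5, 0.5],
                    trans=[[0.7, 0.3], [0.3, 0.7]],
                    emit=[[0.2, 0.8], [0.1, 0.9]]),
    "sitting": dict(start=[0.5, 0.5],
                    trans=[[0.7, 0.3], [0.3, 0.7]],
                    emit=[[0.9, 0.1], [0.8, 0.2]]),
}

def classify(obs):
    """Return the activity whose HMM gives the sequence the highest likelihood."""
    return max(MODELS, key=lambda name: forward_likelihood(obs, **MODELS[name]))

label = classify([1, 1, 0, 1])   # mostly high-motion observations
```

For long sequences the raw probabilities underflow, so practical implementations work with log probabilities or rescale `alpha` at each step.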

There is growing interest in using human-object interaction for recognition. Identifying human actions from still images using contextual information, such as surrounding objects, is also an active topic. These approaches assume that both the human body and the objects around it can provide evidence of the underlying activity. When playing soccer, for example, a player interacts with a ball.

Human behaviors are often closely connected to the actor who performs a specific action. Understanding both the actor and the action may be critical in real-world applications such as robot navigation and patient monitoring. Most existing works do not consider that a single action may be performed differently by different actors. As a result, actors and actions need to be inferred jointly.

Rule-Based Methods

Rule-based techniques represent an activity using rules or attributes that characterize an event, in order to identify ongoing events. Each activity is treated as a collection of basic rules or attributes, which allows a descriptive model for human activity recognition to be built. While performing an activity, each subject must adhere to a set of rules. In one reported example, recognition was carried out on basketball game videos. The players were first detected and tracked, producing a collection of trajectories that were then used to generate a set of spatiotemporal events. The authors could determine which event happened using first-order logic and probabilistic techniques such as Markov networks.

Rule-based techniques cannot directly identify complex human behaviors. Instead, complex activities are decomposed into simpler atomic actions, and combinations of those actions are then used to recognize complicated or concurrent activities.
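The decomposition idea can be sketched with a very small deterministic rule matcher: each complex activity is a rule listing atomic actions that must occur in order. The rule names and atomic actions below are hypothetical kitchen examples, and the probabilistic reasoning mentioned above (for instance Markov networks) is deliberately left out.

```python
# Hypothetical rules: each complex activity is an ordered list of atomic actions.
RULES = {
    "make_tea": ["fill_kettle", "boil_water", "pour_water"],
    "wash_dishes": ["turn_on_tap", "scrub", "rinse"],
}

def matches_rule(detected, rule):
    """True if the rule's atomic actions occur in order (not necessarily adjacent)."""
    remaining = iter(detected)
    # `step in remaining` consumes the iterator up to the match, enforcing order.
    return all(step in remaining for step in rule)

def recognize(detected):
    """Return every complex activity whose rule is satisfied by the detections."""
    return [name for name, rule in RULES.items() if matches_rule(detected, rule)]

detected_actions = ["open_cupboard", "fill_kettle", "boil_water", "take_cup", "pour_water"]
activities = recognize(detected_actions)
```

Because several rules can match the same stream of detections, this kind of matcher can also report concurrent activities.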

Shape-Based Methods

Researchers have shown strong interest in modeling human pose and appearance over the past several decades. Parts of the human body are represented as rectangular patches in 2D space and as volumetric shapes in 3D space. Many algorithms have been proposed for this problem. In one approach, an action is classified using four distinct methods: frame voting, global histogramming, SVM classification, and dynamic time warping.
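Of the four methods listed, the warping-based one, usually called dynamic time warping (DTW), is easy to show in a few lines. The sketch below computes the standard DTW distance between two one-dimensional sequences; the joint-angle interpretation of the sample values is an assumption for illustration.

```python
def dtw_distance(a, b):
    """Dynamic time warping distance between two numeric sequences."""
    inf = float("inf")
    n, m = len(a), len(b)
    # cost[i][j] = best alignment cost of a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = abs(a[i - 1] - b[j - 1])
            cost[i][j] = step + min(cost[i - 1][j],      # a advances, b repeats
                                    cost[i][j - 1],      # b advances, a repeats
                                    cost[i - 1][j - 1])  # both advance
    return cost[n][m]

# Hypothetical joint-angle traces: the same motion performed at different speeds.
fast = [1, 2, 3]
slow = [1, 2, 2, 3]
distance_same_motion = dtw_distance(fast, slow)
distance_other_motion = dtw_distance([0, 0], [1, 1])
```

A query sequence can then be labeled with the activity of its nearest template under this distance, which tolerates differences in execution speed.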

Graphical models have been extensively employed in 3D human posture modeling. A mix of discriminative and generative models improves human posture estimation. Amin et al. investigated multiview pose estimation. Poses from various sources were projected into 3D space using multiview pictorial structural models.

Integrating pose-specific and joint appearance of body parts contributes to a more compelling portrayal of the human body. The human skeleton was separated into five segments, with each section being utilized for training a hierarchical neural network. A hierarchical graph and dynamic programming were used to depict the human stance. A partial least squares technique was applied to learn the representation of human activity aspects.

The recognition procedures could be run in real time using incremental covariance updates and on-demand nearest neighbor classification. The estimated poses are widely used in action recognition. Human pose estimation is very sensitive to conditions such as lighting changes, viewpoint variations, occlusions, background clutter, and clothing. Low-cost devices, such as Microsoft Kinect and other RGB-D sensors, can mitigate these limitations and provide a reasonably accurate estimate.
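An incremental covariance update lets a covariance descriptor be refreshed as each new feature vector arrives, without revisiting earlier frames. The sketch below uses a Welford-style update, which is one standard way to do this and not necessarily the exact scheme used in the work referred to above; the class name and sample points are illustrative.

```python
class RunningCovariance:
    """Welford-style running mean and covariance over feature vectors."""

    def __init__(self, dim):
        self.n = 0
        self.mean = [0.0] * dim
        self.m2 = [[0.0] * dim for _ in range(dim)]   # accumulated co-deviations

    def update(self, x):
        """Fold one new feature vector into the running statistics."""
        self.n += 1
        before = [xi - mi for xi, mi in zip(x, self.mean)]     # deviation from old mean
        self.mean = [mi + d / self.n for mi, d in zip(self.mean, before)]
        after = [xi - mi for xi, mi in zip(x, self.mean)]      # deviation from new mean
        for i, di in enumerate(before):
            for j, dj in enumerate(after):
                self.m2[i][j] += di * dj

    def covariance(self):
        """Sample covariance matrix (requires at least two observations)."""
        return [[v / (self.n - 1) for v in row] for row in self.m2]

# Hypothetical 2D pose features arriving one frame at a time.
tracker = RunningCovariance(dim=2)
for point in [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]:
    tracker.update(point)
cov = tracker.covariance()
```

Each update costs a fixed amount of work per frame, which is what makes this kind of descriptor attractive for real-time use.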

Multimodal Methods For Human Activity Recognition

Recently, there has been a lot of research on multimodal activity recognition. An event may be described by several kinds of features that provide complementary information. Several multimodal approaches are based on feature fusion, which may take two forms: early fusion and late fusion. The simplest way to benefit from several features is to concatenate them into a larger feature vector and then learn the underlying action. Although this fusion strategy improves recognition performance, the resulting feature vector has a much higher dimension.

Because multimodal cues are often associated with time, comprehending the data requires a temporal linkage between the underlying event and the various modalities. In this context, audio-visual analysis is employed in a variety of applications, including audio-visual synchronization, tracking, and activity detection.

Affective Methods

Affective computing research models a person's capacity to express, recognize, and regulate their affective states. Obtaining accurately labeled data is a critical challenge in affective computing. Preprocessing emotional annotations may hinder the creation of accurate and unambiguous affective models. In one approach, four main emotional dimensions are examined: activation, expectation, power, and valence. That approach employs late fusion to merge auditory and visual data.

Although this system could effectively detect a person's emotional state, the computational overhead was significant. Deep learning can be used to model emotional expressions and to extract and select the most useful multimodal features.

Behavioral Methods

Behavioral techniques seek to recognize nonverbal multimodal cues such as gestures, facial expressions, and auditory cues. A behavior recognition system may reveal information about a person's personality and psychological state. It has many applications, from video surveillance to human-computer interaction. One human activity recognition system uses the audio information in video sequences. The critical drawback of this strategy is that it employs separate classifiers to learn the auditory and visual contexts independently.

Social Networking Methods

Social connections are an essential element of everyday living. The capacity to engage with other individuals through their activities is a crucial component of human conduct. Social interaction is a form of activity in which people adjust their conduct in response to the group around them. Studies of social networking platforms that influence people's behavior, such as Facebook, Twitter, and YouTube, track social connections and examine how such sites may be implicated in questions of identity, privacy, social capital, youth culture, and education. Furthermore, the study of social interactions has attracted the attention of scientists, who hope to gain important knowledge about human behavior. A recent survey on human behavior recognition gives a comprehensive overview of current approaches to automated human behavior analysis for single-person, multi-person, and object-person interactions.

Multimodal Feature Fusion

Consider the following scenario: multiple persons are engaged in a given activity/behavior, and some emit noises. A human activity identification system may detect the underlying action in the most basic scenario using visual input. However, the audio-visual analysis may improve identification accuracy since different persons may display various activities with comparable body motions but distinct sound intensity levels. The audio information may aid in determining who the subject of interest is in a test video sequence and distinguishing between various behavioral states.

The dimensionality of data from distinct modalities is a significant challenge in multimodal feature analysis. For example, video features are substantially more complex and higher-dimensional than audio features. Hence, dimensionality reduction approaches are beneficial.

- Early fusion, also known as feature-level fusion, merges data from distinct modalities, often by lowering the dimensionality in each modality and generating a new feature vector that describes a person.

- The second kind of technique, known as late fusion or decision-level fusion, mixes various probabilistic models to learn the parameters of each modality individually.

- Slow fusion is a mixture of the preceding ways. It may be seen as a hierarchical fusion technique that slowly fuses data by passing information via early and late fusion stages.
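The difference between the first two fusion styles listed above can be sketched in a few lines. The feature values, class labels, probabilities, and weights below are made up, and the weighted average used for late fusion is just one simple way of combining per-modality decisions.

```python
def early_fusion(*feature_vectors):
    """Feature-level fusion: concatenate per-modality features into one vector."""
    fused = []
    for vector in feature_vectors:
        fused.extend(vector)
    return fused

def late_fusion(per_modality_probs, weights):
    """Decision-level fusion: weighted average of per-modality class probabilities."""
    total = sum(weights)
    labels = per_modality_probs[0].keys()
    return {label: sum(w * probs[label]
                       for w, probs in zip(weights, per_modality_probs)) / total
            for label in labels}

# Early fusion: a single classifier would be trained on the joint vector.
audio_features = [0.2, 0.9]
video_features = [0.1, 0.4, 0.7]
fused_vector = early_fusion(audio_features, video_features)

# Late fusion: each modality has its own (hypothetical) classifier output.
audio_probs = {"talking": 0.7, "walking": 0.3}
video_probs = {"talking": 0.4, "walking": 0.6}
fused_probs = late_fusion([audio_probs, video_probs], weights=[1.0, 1.0])
decision = max(fused_probs, key=fused_probs.get)
```

In this toy case the video classifier alone would favor "walking", while the fused decision favors "talking" because the audio evidence is stronger. A slow-fusion scheme would interleave both kinds of step across several stages.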

People Also Ask

What Is A Problem Statement For Human Activity Recognition?

Activity detection is a significant problem in smart video monitoring. Detecting human activity in surveillance videos is a critical challenge in computer vision. These applications need real-time detection performance; however, detecting the actual activity takes time.

Which Algorithms Use Human Activity Recognition?

Traditional machine learning methods such as regression, SVM, random forest, and others have been used to recognize human activities.

What Is The Objective Of Human Activity Recognition?

Human activity recognition seeks to identify activities based on a sequence of observations of participants' behaviors and ambient variables. Many applications rely on vision-based human activity recognition research, including video surveillance, health care, and human-computer interface.

What Is Meant By Human Activity Recognition?

Human activity recognition (HAR) is a vast topic of research concerned with recognizing a person's individual movement or action based on sensor data. Movements are common indoor activities such as walking, conversing, standing, and sitting.

Conclusion

Human activity recognition is a fascinating area of computer vision. It is poised to transform various sectors, including healthcare, sports, and entertainment. While the possibilities are promising, 3D pose estimation remains a difficult task. A lack of in-the-wild 3D datasets, a vast search space for each joint, and occluded joints can all reduce the speed and accuracy of motion detection.

On the other hand, deep neural networks have significantly enhanced output by automatically learning characteristics from raw data, making motion tracking a viable application.

Suleman Shah

Author

Suleman Shah is a researcher and freelance writer. As a researcher, he has worked with MNS University of Agriculture, Multan (Pakistan) and Texas A & M University (USA). He regularly writes science articles and blogs for science news website immersse.com and open access publishers OA Publishing London and Scientific Times. He loves to keep himself updated on scientific developments and convert these developments into everyday language to update the readers about the developments in the scientific era. His primary research focus is Plant sciences, and he contributed to this field by publishing his research in scientific journals and presenting his work at many Conferences.

Shah graduated from the University of Agriculture Faisalabad (Pakistan) and started his professional career with Jaffer Agro Services and later with the Agriculture Department of the Government of Pakistan. His research interest compelled and attracted him to continue his career in Plant sciences research. So, he started his Ph.D. in Soil Science at MNS University of Agriculture Multan (Pakistan). Later, he started working as a visiting scholar with Texas A&M University (USA).

Shah’s experience with big Open Access publishers like Springer, Frontiers, MDPI, etc., testified to his belief in Open Access as a barrier-removing mechanism between researchers and the readers of their research. Shah believes that Open Access is revolutionizing the publication process and benefitting research in all fields.

Han Ju

Reviewer

Hello! I'm Han Ju, the heart behind World Wide Journals. My life is a unique tapestry woven from the threads of news, spirituality, and science, enriched by melodies from my guitar. Raised amidst tales of the ancient and the arcane, I developed a keen eye for the stories that truly matter. Through my work, I seek to bridge the seen with the unseen, marrying the rigor of science with the depth of spirituality.

Each article at World Wide Journals is a piece of this ongoing quest, blending analysis with personal reflection. Whether exploring quantum frontiers or strumming chords under the stars, my aim is to inspire and provoke thought, inviting you into a world where every discovery is a note in the grand symphony of existence.

Welcome aboard this journey of insight and exploration, where curiosity leads and music guides.
